Building My First MCP Server: A Practical Guide with Google Routes API

Learn how to build your first Model Context Protocol (MCP) server, step by step, by connecting a local LLM to the Google Maps API.

I’ve been experimenting a lot with LLMs recently, and I keep running into the same hurdle: how can you connect your local AI to real-world tools and data?

This is where the Model Context Protocol (MCP) comes into play.

I decided to learn it myself. Here’s how I built a simple MCP server that lets my local AI calculate driving routes using the Google Maps API.

What is the Model Context Protocol (MCP)?

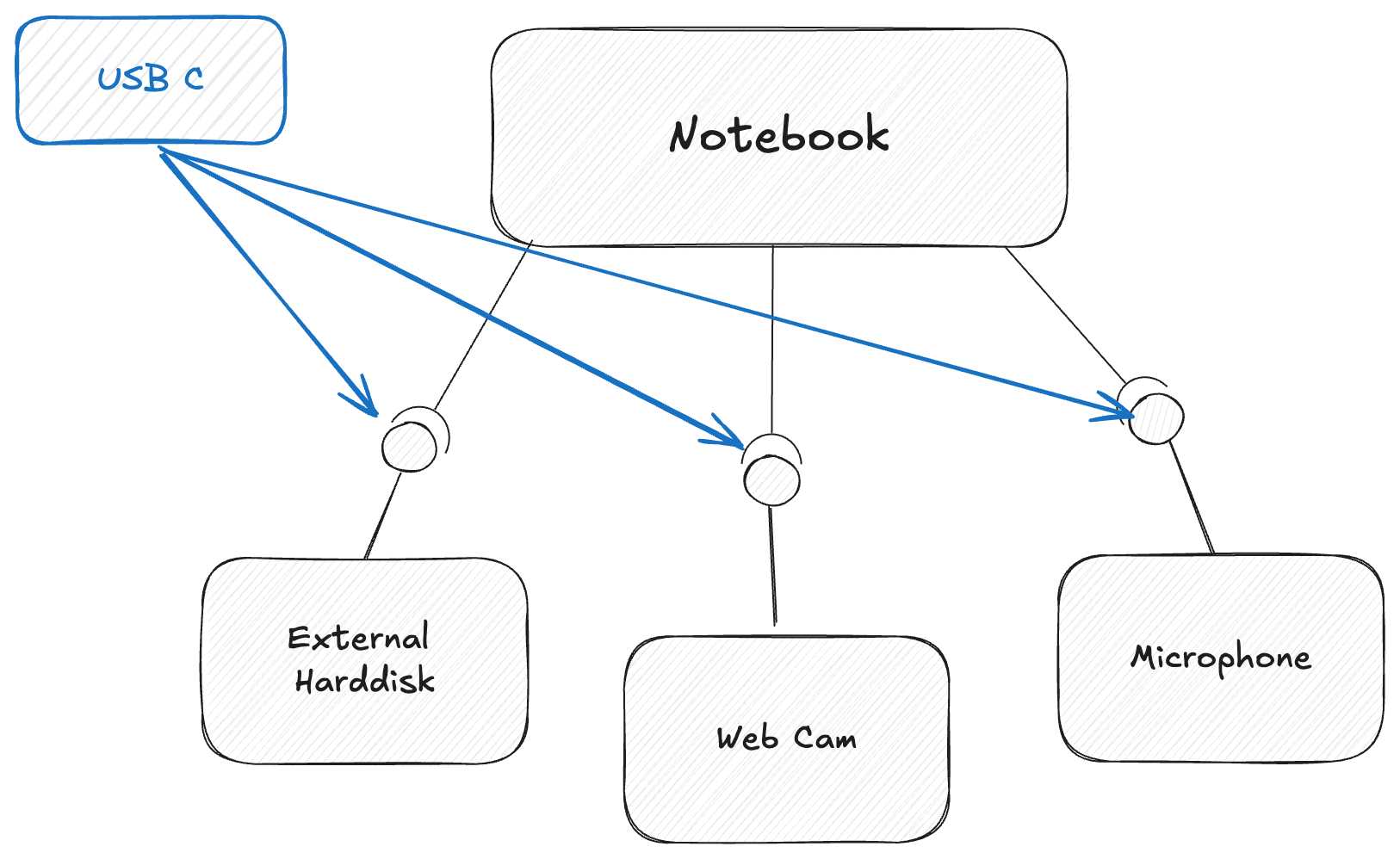

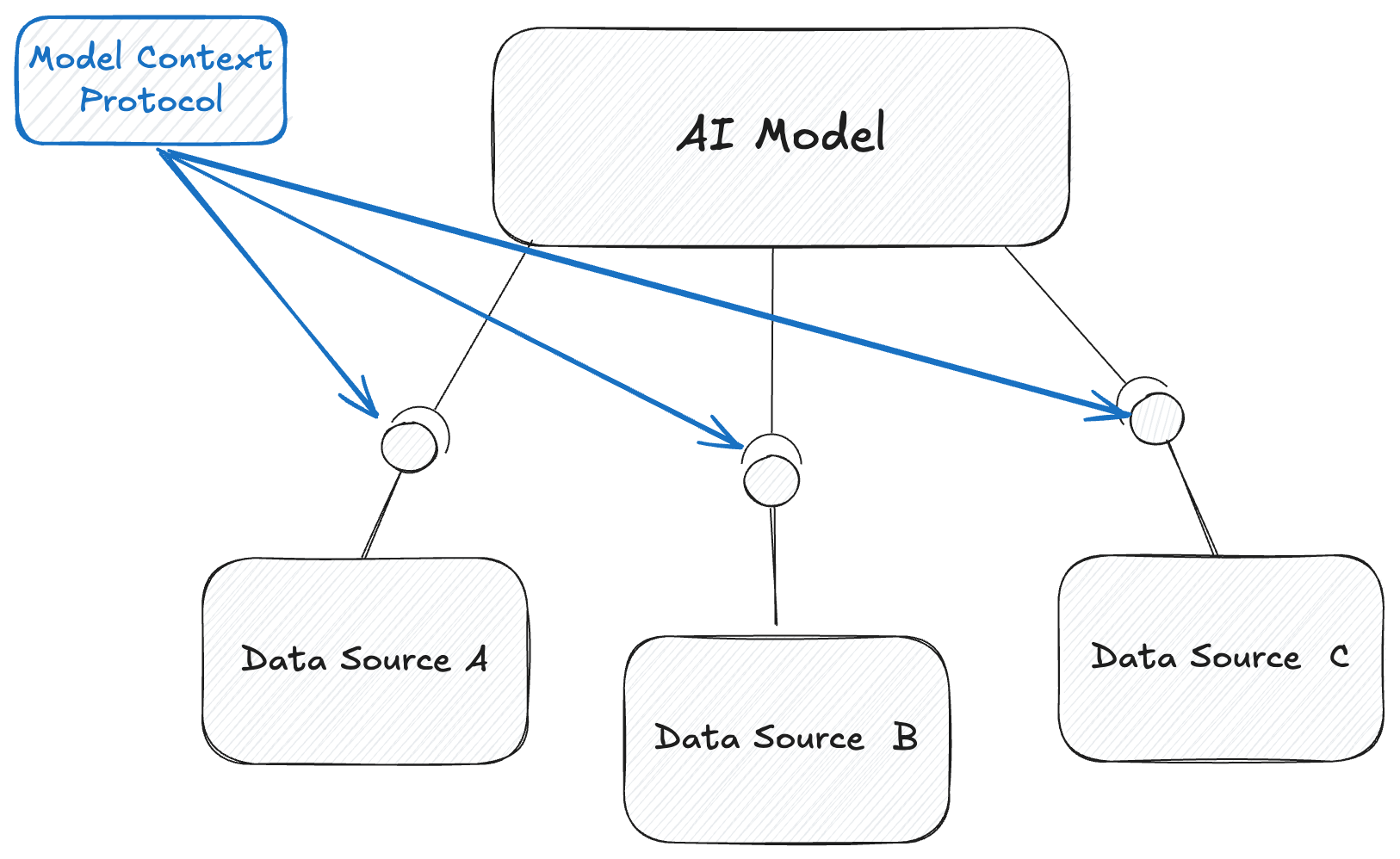

Everyone knows USB-C: it lets you connect all your peripherals in a uniform, standardised way.

The Model Context Protocol does the same for external services. It gives AI models a standard way to connect to various data sources and tools.

Why should you consider the Model Context Protocol from a business perspective?

If you build AI- or LLM-based applications, there are several reasons to consider the Model Context Protocol for your business.

By using MCP, you can gain the following benefits:

Faster product development and innovation for LLM-based products

MCP makes it easier for different AI models, tools and services to share a common context and work together smoothly. This reduces duplicated work (e.g. re-entering or re-training on the same data) and shortens the time-to-market of AI-powered products.

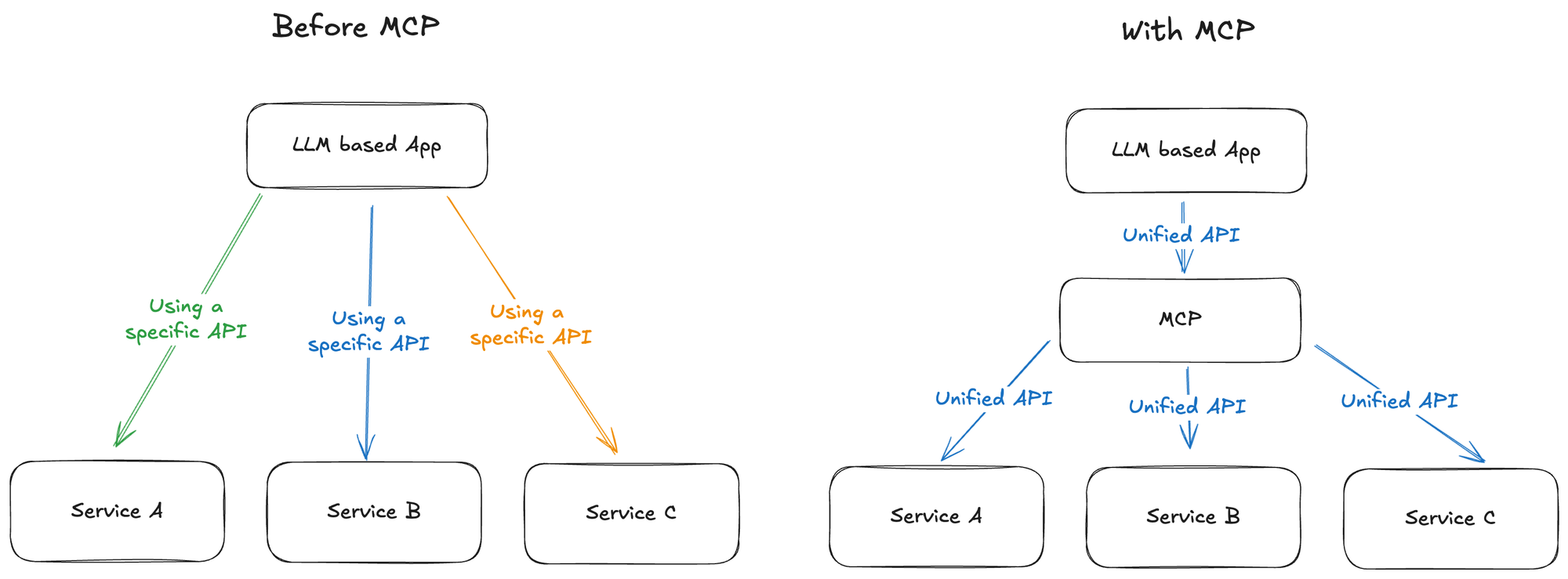

Better interoperability

MCP is like a “universal language” for the context between systems. It prevents vendor lock-in because you’re not tied to a monolithic AI or data platform. This flexibility protects your investments and allows you to swap tools as needed.

Higher quality decisions

When systems can share a broader, consistent context, your AI assistants, agents or workflows make fewer mistakes. This means less rework, a better user experience and more reliable automation. Greater accuracy leads to better results - be it in customer support, personalisation or knowledge management.

Fewer integration difficulties

Without a protocol like MCP, you have to create custom integrations for each tool or model to “understand” the common context. MCP standardises this so you spend less on glue code and maintenance. It reduces the cost and risk of integrating third-party services into your AI landscape.

New business models

With MCP, you can integrate third-party AI agents or services that speak the same “context language”. This opens the door to marketplaces of specialised AI agents that work together. You could even market your own proprietary models or data as MCP-compatible services.

Core elements of an MCP-based architecture

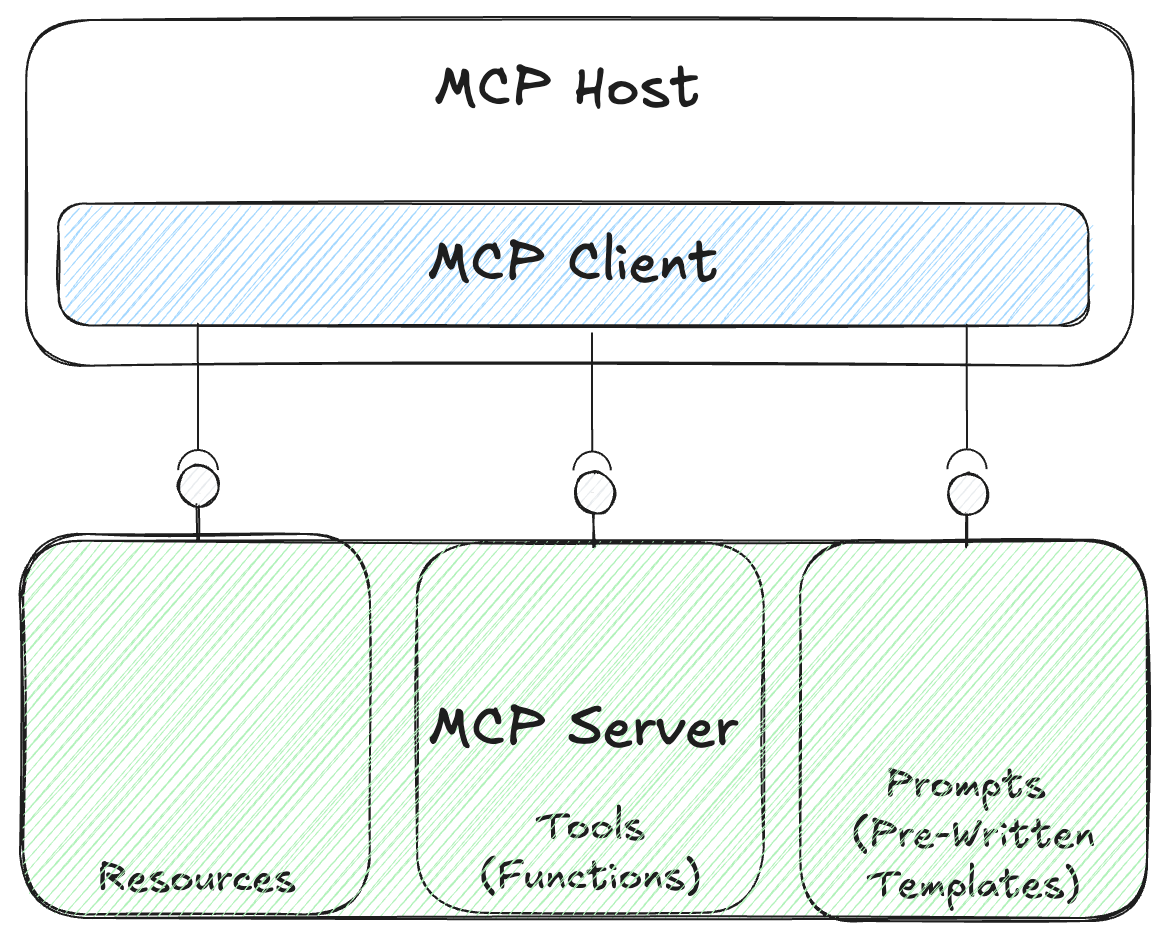

Components of an MCP application

A typical MCP application consists of the following components:

MCP Hosts

An application with an LLM that wants to access external data to get more context.

Examples of MCP hosts are programs like Claude Desktop, IDEs, or AI toolkits like LM Studio.

MCP Clients

Protocol clients inside the host, each of which maintains a 1:1 connection with an MCP server.

MCP Servers

(Micro-)services or programs that expose specific capabilities through the standardised Model Context Protocol.

Local and Remote Data Sources and Services

Services, databases, files, etc. that MCP servers can securely access, either locally or remotely.

Building an example MCP server: "Google Routes API MCP Server"

The idea for the example came from a coffee-break discussion about the route calculation feature of the Google Maps API.

So I set out to write a small MCP server that calls the Google Routes API and gives my local LLM a tool for calculating routes.

With it, I can ask my local LLM questions like:

How long does it take to drive from Lucerne to Zurich by car?

So let's start.

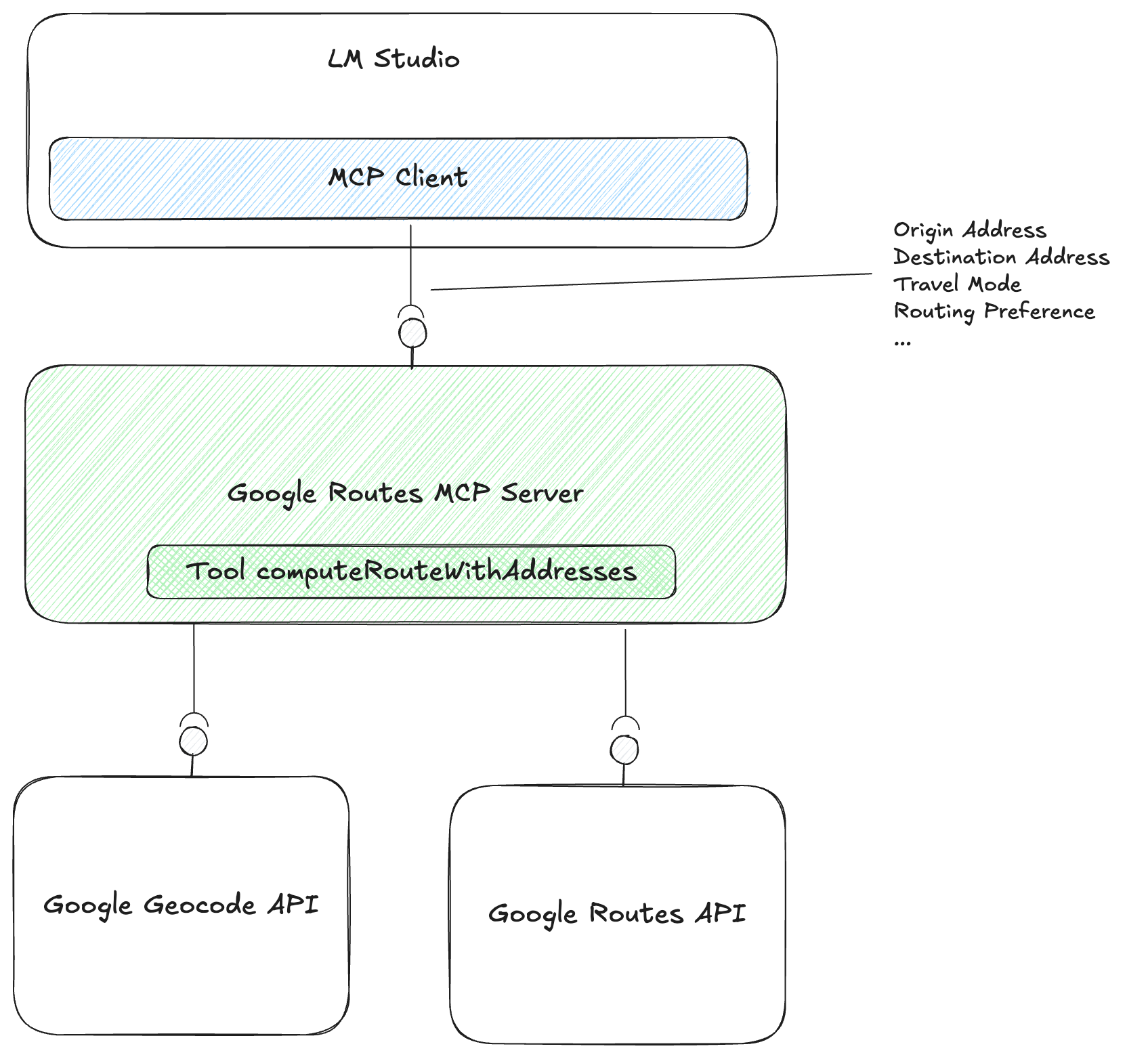

Architecture Overview

The following image shows the architectural overview of my learning prototype "Google Routes MCP Server":

The architecture consists of the following main components:

- A locally installed LM Studio, which acts as the MCP host and client for my Google Routes MCP server

- The Google Routes MCP server, which provides the computeRouteWithAddress tool to compute routes based on the addresses in a prompt. For this purpose, it calls two external APIs: the Google Geocoding API and the Google Routes API.

- The Google Geocoding API, which resolves an address into coordinates (latitude and longitude)

- The Google Routes API, which calculates route information between two locations based on a few options, such as the travel mode
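The data flow between these components fits into a small, dependency-free TypeScript sketch. It assumes two hypothetical interfaces, Geocoder and RouteCalculator, which stand in for the Geocoding and Routes API calls. That way the orchestration (address to coordinates to route) can be shown and tested without network access. All type and function shapes here are illustrative, not taken from the author's repository.

```typescript
// Coordinates produced by the geocoding step.
interface LatLng {
  latitude: number;
  longitude: number;
}

// Compact route result handed back to the LLM (hypothetical shape).
interface RouteSummary {
  distanceMeters: number;
  durationSeconds: number;
}

// Hypothetical abstraction over the Google Geocoding API.
interface Geocoder {
  geocode(address: string): Promise<LatLng>;
}

// Hypothetical abstraction over the Google Routes API.
interface RouteCalculator {
  computeRoute(origin: LatLng, destination: LatLng): Promise<RouteSummary>;
}

// What the MCP tool does internally:
// 1. resolve both addresses (in parallel), 2. compute the route between the coordinates.
async function computeRouteWithAddresses(
  originAddress: string,
  destinationAddress: string,
  geocoder: Geocoder,
  router: RouteCalculator,
): Promise<RouteSummary> {
  const [origin, destination] = await Promise.all([
    geocoder.geocode(originAddress),
    geocoder.geocode(destinationAddress),
  ]);
  return router.computeRoute(origin, destination);
}
```

Injecting the two API clients keeps the tool handler testable with fakes. It also lets both addresses be resolved concurrently.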

Initialisation of the TypeScript-based Google Routes MCP server

To initialise the MCP server, I need the following:

3rd party dependencies

- The official TypeScript SDK for the Model Context Protocol (@modelcontextprotocol/sdk), which provides everything you need to set up an MCP server

- Zod for describing and validating the schema of the input parameters

- Axios for calling the HTTP-based Google APIs

3rd party development tools

- TypeScript and ts-node for compiling and running the TypeScript code

- The Model Context Protocol Inspector for troubleshooting and debugging the MCP server later on

Register the tool for the LLM

Let's create a server.ts file in a src folder and define the tool on the server object provided by @modelcontextprotocol/sdk.

Let's call the tool computeRouteWithAddresses.

To make a tool available to an LLM-based application via MCP, you have to register it. Registration works via the registerTool method of the McpServer object, which is provided by the @modelcontextprotocol/sdk package.
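Here is a minimal registration sketch. It assumes a recent 1.x version of @modelcontextprotocol/sdk (which provides McpServer.registerTool) and Zod 3. The handler is only a placeholder that echoes the addresses. The server name, version and title are made up for illustration.

```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
import { z } from "zod";

// Name under which the LLM sees the tool.
export const TOOL_NAME = "computeRouteWithAddresses";

export function createServer(): McpServer {
  const server = new McpServer({ name: "google-routes-api", version: "0.1.0" });

  server.registerTool(
    TOOL_NAME,
    {
      title: "Compute driving route",
      description: "Computes distance and driving time between two addresses.",
      // Raw Zod shape: the SDK turns it into the JSON Schema the client sees.
      inputSchema: {
        originAddress: z.string().describe("Start address, e.g. 'Lucerne, Switzerland'"),
        destinationAddress: z.string().describe("Destination address, e.g. 'Zurich, Switzerland'"),
      },
    },
    async ({ originAddress, destinationAddress }) => ({
      // Placeholder result; the real handler geocodes both addresses and calls the Routes API.
      content: [{ type: "text", text: `Route from ${originAddress} to ${destinationAddress}: not implemented yet` }],
    }),
  );

  return server;
}

// Connects the server to stdin/stdout, which is how local hosts such as LM Studio talk to it.
// The entry point of server.ts would call: main().catch((err) => { console.error(err); process.exit(1); });
export async function main(): Promise<void> {
  await createServer().connect(new StdioServerTransport());
}
```

Keeping registration inside a createServer() factory means the server can be imported (for example by tests) without immediately binding it to stdin and stdout.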

Defining the input params via Zod

When you register the tool, you also define its input parameters, i.e. the input schema the MCP client has to fill in when it calls the tool. In our case, we definitely need the originAddress and the destinationAddress. Both fields can be defined with Zod, a TypeScript-first schema validation library.

So let's define the input schema via Zod.
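The schema can be sketched as a raw Zod shape, which is the form registerTool accepts as inputSchema. The `.describe()` texts are what the LLM reads to understand each parameter. The optional travelMode field is a hypothetical addition, not part of the original tool. Its values mirror the travel modes the Google Routes API accepts.

```typescript
import { z } from "zod";

// Raw shape, as passed to registerTool's inputSchema.
const computeRouteInputShape = {
  originAddress: z
    .string()
    .min(1)
    .describe("Start address as free text, e.g. 'Lucerne, Switzerland'"),
  destinationAddress: z
    .string()
    .min(1)
    .describe("Destination address as free text, e.g. 'Zurich, Switzerland'"),
  // Hypothetical extra parameter; defaults to driving when the LLM omits it.
  travelMode: z
    .enum(["DRIVE", "BICYCLE", "WALK", "TWO_WHEELER", "TRANSIT"])
    .default("DRIVE")
    .describe("How to travel; use DRIVE for car routes"),
};

// Wrapping the shape in z.object() lets you validate arguments outside the SDK, e.g. in tests.
const computeRouteInput = z.object(computeRouteInputShape);

// Static type of validated arguments (travelMode is always set after parsing).
type ComputeRouteInput = z.infer<typeof computeRouteInput>;
```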

With the input parameters in place, we can implement the logic of the MCP server.

Implementing the tool logic

We need two API calls for the Google Routes API MCP server. One resolves an address into coordinates (for both origin and destination) via the Google Geocoding API. The other calculates the route details via the Google Routes API.

The tool logic first resolves each address with the Google Geocoding API (geocodeAddress) and then calls the Google Routes API.

The idea is to resolve the two address strings (originAddress and destinationAddress) into coordinates (latitude/longitude objects) and use them to calculate the route.
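The two calls can be sketched as follows. This version swaps Axios for Node's built-in fetch (Node 18+) to stay dependency-free. It also passes the API key in explicitly. Apart from geocodeAddress, the helper names are hypothetical. Request builders and response parsing are kept in pure functions so they can be checked without calling Google.

```typescript
interface LatLng {
  latitude: number;
  longitude: number;
}

// Only the parts of the Geocoding API response this sketch reads.
interface GeocodeResponse {
  status: string;
  results: { geometry: { location: { lat: number; lng: number } } }[];
}

const GEOCODE_URL = "https://maps.googleapis.com/maps/api/geocode/json";
const ROUTES_URL = "https://routes.googleapis.com/directions/v2:computeRoutes";

// Builds the Geocoding API request URL for a free-text address.
function buildGeocodeUrl(address: string, apiKey: string): string {
  return `${GEOCODE_URL}?${new URLSearchParams({ address, key: apiKey }).toString()}`;
}

// Takes the coordinates of the first geocoding result.
function parseGeocodeResponse(body: GeocodeResponse): LatLng {
  if (body.status !== "OK" || !body.results?.length) {
    throw new Error(`Geocoding failed with status ${body.status}`);
  }
  const { lat, lng } = body.results[0].geometry.location;
  return { latitude: lat, longitude: lng };
}

// JSON body for a computeRoutes request between two coordinates.
function buildRoutesRequestBody(origin: LatLng, destination: LatLng) {
  return {
    origin: { location: { latLng: origin } },
    destination: { location: { latLng: destination } },
    travelMode: "DRIVE",
    routingPreference: "TRAFFIC_AWARE",
  };
}

async function geocodeAddress(address: string, apiKey: string): Promise<LatLng> {
  const res = await fetch(buildGeocodeUrl(address, apiKey));
  if (!res.ok) throw new Error(`Geocoding HTTP ${res.status}`);
  return parseGeocodeResponse((await res.json()) as GeocodeResponse);
}

async function computeRoute(origin: LatLng, destination: LatLng, apiKey: string): Promise<unknown> {
  const res = await fetch(ROUTES_URL, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "X-Goog-Api-Key": apiKey,
      // The Routes API requires a field mask; requesting only two fields keeps the response small.
      "X-Goog-FieldMask": "routes.duration,routes.distanceMeters",
    },
    body: JSON.stringify(buildRoutesRequestBody(origin, destination)),
  });
  if (!res.ok) throw new Error(`Routes HTTP ${res.status}: ${await res.text()}`);
  return res.json();
}
```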

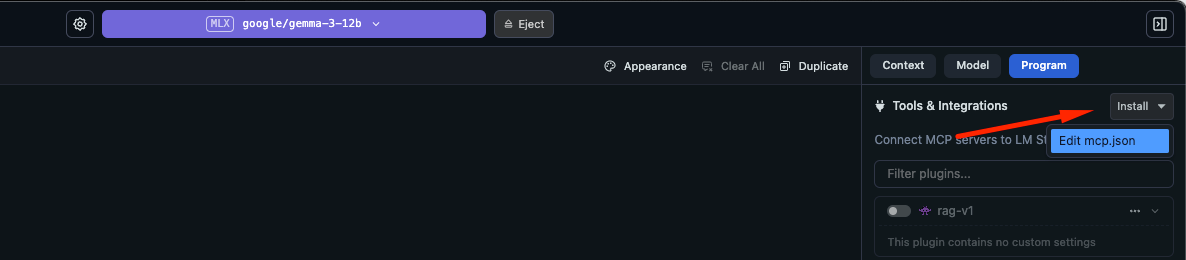

Register the tool in your AI toolkit / MCP Client

I use LM Studio as a local client to experiment with different OSS LLMs. It's a pretty cool local AI toolkit (free!).

To use the tool locally, I need two things:

- An LLM: I downloaded Google's latest open model, Gemma, and loaded it

- The tool configuration in mcp.json

In mcp.json, you register the tools you want to use with your local AI toolkit, which acts as the MCP client.

The following code snippet shows the mcp.json configuration for the locally registered tool.

In the background, your AI toolkit runs this tool locally using the installed Node.js runtime.
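LM Studio's mcp.json follows Cursor's mcp.json notation: a top-level mcpServers object whose entries name a command, its arguments and optional environment variables. To keep all examples in TypeScript, the sketch below builds such an entry as a typed object and prints the resulting JSON. The server key google-routes-api (which would presumably appear as mcp/google-routes-api), the script path and the GOOGLE_MAPS_API_KEY variable name are assumptions, not the author's actual file.

```typescript
// One server entry in mcp.json (Cursor-style notation).
interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface McpJson {
  mcpServers: Record<string, McpServerEntry>;
}

// Builds the config for a locally compiled server; path and env variable name are placeholders.
function buildMcpJson(serverScript: string, apiKey: string): McpJson {
  return {
    mcpServers: {
      "google-routes-api": {
        command: "node",
        args: [serverScript],
        env: { GOOGLE_MAPS_API_KEY: apiKey },
      },
    },
  };
}

// Prints the JSON you would paste into mcp.json.
console.log(JSON.stringify(buildMcpJson("/path/to/google-routes-mcp/build/server.js", "<your-api-key>"), null, 2));
```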

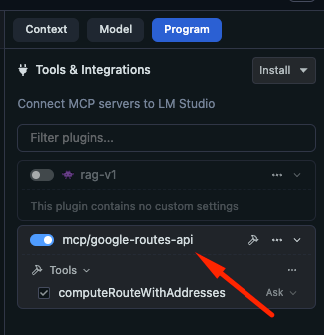

Finally, use the tool with your LLM in the AI toolkit

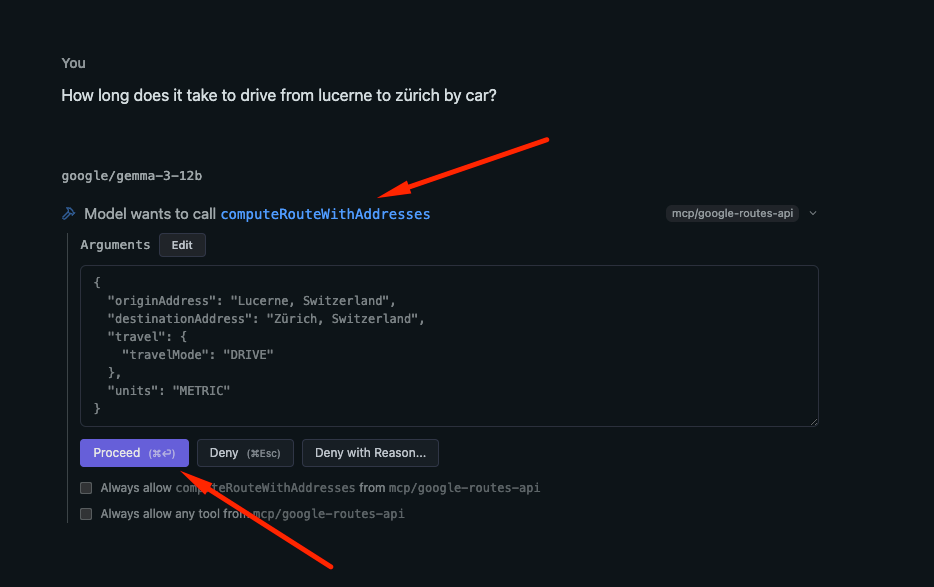

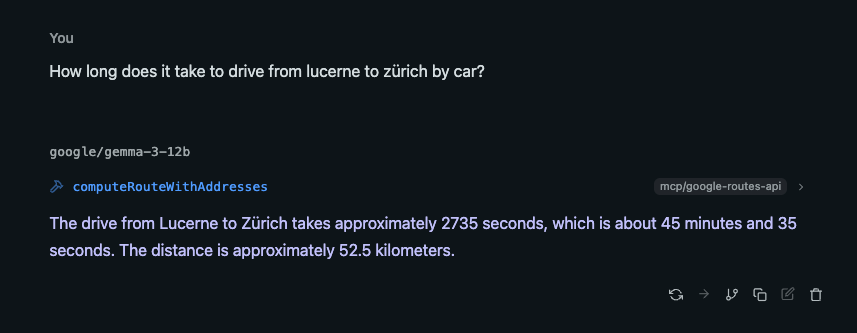

Once the LLM (Google Gemma 3 in my example) and the mcp/google-routes-api integration are loaded, you can write a prompt and send it.

E.g. "How long does it take to drive from Lucerne to Zurich by car?"

The LLM recognises that a tool called computeRouteWithAddress is registered and suggests calling it with the corresponding parameters.

You can then approve the tool call.

The tool is called, and the MCP client receives the result. The LLM can then add it to its context and answer your original question.
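Under the hood, host and server exchange JSON-RPC 2.0 messages defined by the MCP specification. The client discovers tools with tools/list, and once you approve the call it sends a tools/call request. The sketch below models only the tools/call request and its text result. The id and argument values are illustrative.

```typescript
// JSON-RPC 2.0 request an MCP client sends to invoke a tool.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Tool result: a list of content items plus an optional error flag (only text items modelled here).
interface ToolCallResult {
  jsonrpc: "2.0";
  id: number;
  result: { content: { type: "text"; text: string }[]; isError?: boolean };
}

function buildToolCall(id: number, name: string, args: Record<string, unknown>): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Joins the text items of a result; this is the text a host would typically pass back to the LLM.
function extractText(response: ToolCallResult): string {
  return response.result.content.map((item) => item.text).join("\n");
}

const request = buildToolCall(1, "computeRouteWithAddresses", {
  originAddress: "Lucerne",
  destinationAddress: "Zurich",
});
console.log(JSON.stringify(request));
```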

Lessons learnt

Here are a few lessons we learnt during the development of the prototype and the creation of the MCP server:

- MCP is a good way to bring LLMs closer to your data and services. It's definitely worth a try!

- Zod is a great way to perform schema and input validation. Add plenty of meaningful descriptions to the parameters so that the LLM understands them.

- Pay attention to the size of your tool's result. The more tokens it uses, the longer the interaction takes.

- Use the Model Context Protocol Inspector to check your MCP server before bringing it to an MCP host (this deserves a separate post).
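One way to act on the result-size lesson is to condense the Routes API response into a single sentence before handing it to the LLM. This sketch assumes the request used the field mask routes.duration,routes.distanceMeters, so each route carries only those two fields. The Routes API returns durations as strings such as "1234s". The function names and the summary wording are hypothetical.

```typescript
// Subset of a computeRoutes response requested with "routes.duration,routes.distanceMeters".
interface RoutesResponse {
  routes?: { distanceMeters?: number; duration?: string }[];
}

// Parses a duration string like "1234s" or "1234.5s" into whole seconds.
function parseDurationSeconds(duration: string): number {
  const match = /^(\d+(?:\.\d+)?)s$/.exec(duration);
  if (!match) throw new Error(`Unexpected duration format: ${duration}`);
  return Math.round(Number(match[1]));
}

// Returns one short sentence instead of the raw JSON, keeping the tool result to a few tokens.
function summarizeRoute(origin: string, destination: string, response: RoutesResponse): string {
  const route = response.routes?.[0];
  if (!route || route.distanceMeters === undefined || route.duration === undefined) {
    return `No route found from ${origin} to ${destination}.`;
  }
  const km = (route.distanceMeters / 1000).toFixed(1);
  const minutes = Math.round(parseDurationSeconds(route.duration) / 60);
  return `Driving from ${origin} to ${destination}: ${km} km, about ${minutes} min.`;
}
```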

GitHub Repo

You can find the working Google Routes MCP server (prototype!) here:
